This work is licensed under the Creative Commons Attribution 2.0 License. You are free to copy, distribute, and display the work, and to make derivative works (including translations). If you do, you must give the original author credit. The author specifically permits (and encourages) teachers to post, reproduce, and distribute some or all of this material for use in their classes or by their students.

What's wrong with chocolate?

At one level, of course, nothing at all is wrong with chocolate. There aren't many essential nutrients in chocolate, but in and of itself that is not a problem. Consumed in moderation, as part of a well-rounded diet, chocolate adds variety and pleasure to the diet, and in some cases may provide needed calories.

But of course, that isn't the end of the story. What's wrong with chocolate is that it is delicious. Addictive, in fact! There was a reason for the invention of the word chocoholic. Where moderate consumption might be useful, chocolate tempts us to immoderate consumption. Where we should consume a variety of foods to obtain a balanced intake of nutrients, chocolate tempts us to ignore other foods and eat only chocolate. If we succumb to its charms, we eat an unbalanced diet. If we eat an unbalanced diet, we suffer. The consequences of excessive chocolate consumption are very real and easy to see (on the bathroom scale, for instance). But (and here is part of the problem) the causal connection between chocolate consumption and its consequences is (in contrast to chocolate's easily-perceived immediate charms) virtually invisible.

The problem with chocolate, then, lies in a combination of things: it does not provide a complete, balanced nutritional intake; it is extremely attractive, tempting one to consume only chocolate and ignore other foods; and it is very difficult to make the connection between excessive chocolate consumption and its undesirable consequences.

Use Cases have the same problems, caused by the same combination of characteristics:

In fairness, it should be noted that the Use Case approach is not the culprit here. The connection between the quality of the requirements-gathering for a system, and the quality of the system that is eventually developed, seems to be invisible to most organizations regardless of which requirements-gathering methodology the organization is officially practicing. That's part of the reason why we -- as a profession and as an industry -- continue to do such a poor job of requirements gathering, and continue to have so many system failures.

In short, the analog of the Chocoholic's Diet (in which the unfortunate dieter consumes only, or mostly, chocolate) is what we might call the Use Case Approach (UCA) in which the unfortunate software development organization uses only, or mainly, Use Cases to gather and document client requirements. We have been warned for years, by everyone from the government to our own doctors, about the dangers of junk food diets, of which the Chocoholic's Diet is an example. Now it is time to issue a warning about the dangers of junk requirements-gathering methodologies, of which the Use Case Approach is an example.

The time has come to issue such a warning because the Use Case Approach is quickly gaining in popularity in software development shops. In most cases, UCA is riding on the coat-tails of UML. There are some UML proponents who advocate the requirements-gathering equivalent of a balanced diet: a requirements-gathering methodology in which Use Cases figure as only one element of a well-rounded diet of requirements-gathering techniques. But for every such reasonable guru, there is at least one snake-oil salesman pushing Use Cases as The Solution For All Your Requirements Methodology Needs. Some of these gentlemen give lip service to the idea of a well-balanced diet, but it is only lip service. In their methodology books and courses, they pause briefly to give a cursory nod to (for instance) describing the problem domain, before spending the overwhelming bulk of discussion on Use Cases.

Unfortunately, the software development community seems to be eating it up. Many organizations see UCA as a silver bullet for their biggest requirements gathering problem... which, incidentally, often seems to be not "How do you gather requirements effectively?" but "What do you say when somebody asks you what your official requirements gathering methodology is?" It used to be said that no one ever lost their job by buying IBM; today, no one ever loses their job by adopting Use Cases and UML.

This stampede of enthusiasm has produced the software development equivalent of a national health crisis. Just as the popularity of the "Sugar Buster's Diet" has forced doctors and dietitians to issue warnings about the nutritional deficiencies of such a diet, the popularity of the Use Case Approach makes it imperative to point out the deficiencies of Use Cases as a requirements-gathering tool. That's the purpose of this article.

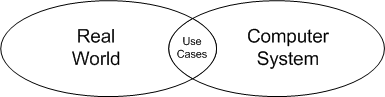

When we embark on a system development project, the system to be developed is the putative solution to some problem in the user's environment (the "application domain").

Naturally, we would expect the problem-solving method to look something like this:

A Use Case — as a description of an actor's interaction with the system-to-be — is both a description of the system's user interface and an indirect description of some function that the system will provide. In short, as descriptions of the system-to-be, Use Cases belong in step 2 -- describing the proposed solution to the problem. So the development of Use Cases has a place in the problem solving process... but that place is not as the first step, and it is not as the only step.

The first activity in the requirements-gathering process must be the study and description of the problem-environment, the application domain. To put it bluntly, the requirements analyst's first job is to study and understand the problem, not to jump right in and start proposing a solution. (This is a major theme of Michael Jackson's 1995 book, Software Requirements and Specifications, and his new book, Problem Frames. In Software Requirements and Specifications, see especially the entry on "The Problem Context".)

One way that the problem domain can be described is by creating a model. The developer begins by creating a model of the "real world", i.e. the part of the real world that is relevant to the problem at hand, the part of reality with which the system is concerned and which furnishes its subject matter.

We start by modeling the real world (rather than describing the functionality that we wish the system-to-be to provide) because the model supplies essential components that we need in order to create our descriptions of the system functionality. In describing a university library application, for example, in the real-world model we would describe books and copies of books, describe what counts as being a university member for the purposes of using the library, and so on. In creating the domain model we, in a sense, construct a dictionary of words. We can then use those words when we write our descriptions of the functions and Use Cases that we wish the system to support. In the case of the university library, once we have described the objects in the model, we can specify any system functions that can be described using a vocabulary of "books", "copies of books", and "university members".

Note that the scope of the vocabulary that was created in the domain model implicitly defines (and defines the limits of) a set of possible system functions [Jackson, 1983, p. 64]. We can specify any function that can be described using the vocabulary of words that appear in the model/dictionary, but we cannot specify a function if its description would require terms that are not in the model. For instance, we can describe the process of a member borrowing a book and the process of returning it, the process of reserving a book and the process of notifying a member when a reserved book becomes available for checkout, the process of sending out overdue notices, and so on. But we cannot describe a Use Case in which a university member presses a button that triggers the rocket launch of a weather satellite into orbit. "Rocket", "satellite", and "launch" were not part of the conceptual vocabulary that was created in our domain description of the university library.

Note that the model of reality isn't just something that is nice to have in order to support the descriptions of the Use Cases. It is an essential foundation for those descriptions. If we produce just the Use Cases without first creating the description of the problem domain, then the descriptions of the Use Cases are fundamentally flawed by using undefined terms. A Use Case description for Member Checks Out a Book will use the terms "library member" and "book", but if those terms have not been defined earlier (in the model of the application domain), then the Use Case specification is necessarily vague (i.e. not clearly defined).
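The dependence of Use Case descriptions on a domain vocabulary can be sketched in a few lines of Python. This is only an illustration: the vocabulary set, the function name `undefined_terms`, and the term lists are hypothetical and are not taken from any methodology or tool.

```python
# Hypothetical domain vocabulary for the university library example.
# In practice each term would carry a full definition; a set is enough here.
DOMAIN_VOCABULARY = {"member", "book", "copy", "loan", "reservation"}


def undefined_terms(use_case_terms, vocabulary=DOMAIN_VOCABULARY):
    """Return the terms a Use Case relies on that the domain model never defined."""
    return sorted(set(use_case_terms) - set(vocabulary))


# "Member Checks Out a Book" stays inside the vocabulary.
print(undefined_terms(["member", "copy", "loan"]))         # []

# The satellite-launch Use Case needs terms the model does not contain.
print(undefined_terms(["member", "rocket", "satellite"]))  # ['rocket', 'satellite']
```

With no domain model at all, the vocabulary is empty and every term in every Use Case comes back as undefined, which is the situation the paragraph above describes.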

Consider the term "book". In this context, the term "book" is ambiguous between book as a work of art, which has no physical location, and book as a physical object (a "copy of a book") that does. Only after we have disambiguated the word "book", can we explain, for instance, why the book involved in the Member Checks Out Book Use Case is not the same as the book in the Member Reserves Book Use Case. I often use the university library as a teaching example in my data modeling classes, just because of this ambiguity with the word "book". It exposes the students to the issue of ambiguity in domain descriptions, and helps me make the point that one of the requirements analyst's most important tasks is the detection and removal of such ambiguities.
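One way to make the two senses of "book" explicit is to give each its own type in the domain model. The sketch below is a minimal illustration under assumed names (`Title`, `Copy`, `Library`, `check_out`, `reserve`); none of these identifiers come from the original library example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Title:
    """A book as a work: it has a name, but no physical location."""
    isbn: str
    name: str


@dataclass
class Copy:
    """A book as a physical object: one particular copy of a Title."""
    barcode: str
    title: Title
    borrowed_by: Optional[str] = None  # member id, or None while on the shelf


@dataclass
class Library:
    copies: List[Copy] = field(default_factory=list)
    # Reservations are keyed by ISBN: a member reserves a Title, not a Copy.
    reservations: Dict[str, List[str]] = field(default_factory=dict)

    def check_out(self, member_id: str, barcode: str) -> Copy:
        """Member Checks Out Book: the 'book' is a specific physical Copy."""
        copy = next((c for c in self.copies if c.barcode == barcode), None)
        if copy is None:
            raise KeyError(f"no copy with barcode {barcode}")
        if copy.borrowed_by is not None:
            raise ValueError("copy is already on loan")
        copy.borrowed_by = member_id
        return copy

    def reserve(self, member_id: str, title: Title) -> None:
        """Member Reserves Book: the 'book' is a Title; any copy will do."""
        self.reservations.setdefault(title.isbn, []).append(member_id)
```

Once the model separates the two types, the difference between the two Use Cases shows up in the signatures: `check_out` takes a barcode, while `reserve` takes a `Title`.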

Such ambiguities are quite real, and quite common. By now, you would expect that we would have recognized that fact, and have learned to deal with it. Yet one of the most common causes for major project problems is the failure of developers and their clients to consider seriously that ambiguity might exist in their requirements documents. Clients and developers tend to think that their primary job on the project is to describe the "requirements" for the system (that is, to describe what the system is supposed to do, the solution to the problem), not to describe the problem or the problem domain. Project participants often simply assume that the meanings of familiar terms are so clear and so well known to everyone present, that no explicit definition is necessary. This assumption is often false, and when it is false, the consequence is that significant ambiguities in the system specification remain hidden until they emerge and plague the later stages of the development project.

In a case that I heard about recently, a requirements analyst was working on a project for a large American railroad corporation. He was having problems capturing the requirements because his users did not use the word "train" consistently. To some of them, a "train" was a particular collection of rolling stock (a locomotive and all the cars it pulled). To others, a "train" was just the locomotive. To others, a "train" was a regularly scheduled run, as in "I'll catch the 6 o'clock train to Boston". To others, a "train" was a specific instance of a regularly scheduled run, so that the train that left for Boston today at 6 o'clock, and the train that left for Boston yesterday at the same time, are two different trains. And so on.

In this case, fortunately, the ambiguities were so glaring that they could not be ignored. On many projects the ambiguities are not so intrusive, so they are left hidden, like ticking time bombs.

Another reason that we start development with capturing information about the real world is that there are properties of the real world that constrain the system, or that the system must know about, or that the system relies on, in order to satisfy the customer's requirements. Here, the downside of the Use-Case approach is that it draws the requirements analyst's attention away from the task of describing properties of the real world, and focuses his attention on the narrow area where the real world interacts directly with the system.

In embedded systems, the system's reliance on properties of the surrounding real world is very real, and can often be safety-critical. In Software Requirements and Specifications (entry "Requirements") Michael Jackson describes an incident in which an airplane overshot the runway when attempting to land. The runway was wet, and the plane's wheels were aquaplaning instead of turning. The plane's guidance system thought, in effect, "I'm on the ground, but my wheels aren't turning. So I must not be moving," and would not allow the pilot to engage reverse thrust. Aquaplaning, a very relevant property of the real world, was not considered by the developers when they created the guidance system. The consequence is a plane in a ditch past the end of the runway, instead of safely docked in the terminal. (Jackson says that the error, which could have been catastrophic, fortunately was not.)
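The inference described above can be written down as a small sketch. It is purely illustrative: the names (`Landing`, `reverse_thrust_allowed`) and the numbers are invented, and it is not a description of the actual avionics logic. It only shows how an unstated domain assumption ("a moving plane on the ground has turning wheels") ends up embedded in code.

```python
from dataclasses import dataclass


@dataclass
class Landing:
    on_ground: bool
    wheel_speed: float        # what the system can sense (hypothetical units)
    true_ground_speed: float  # what is actually happening in the world


def reverse_thrust_allowed(landing: Landing) -> bool:
    """Interlock built on the assumption 'wheels not turning' means 'not moving'."""
    return landing.on_ground and landing.wheel_speed > 0.0


dry_runway = Landing(on_ground=True, wheel_speed=70.0, true_ground_speed=70.0)
wet_runway = Landing(on_ground=True, wheel_speed=0.0, true_ground_speed=70.0)  # aquaplaning

print(reverse_thrust_allowed(dry_runway))  # True
print(reverse_thrust_allowed(wet_runway))  # False, although the plane is still moving
```

Nothing in the function is wrong relative to its own inputs. The defect lies in the relationship between `wheel_speed` and `true_ground_speed`, which is a property of the world that only a description of the problem domain would record.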

For computer professionals, it is tempting to blame such problems on the clients. Knowing about aquaplaning, we are tempted to say, is our client's problem. Our only job is to figure out how to make the system do what they tell us they want it to do, in their Use Case descriptions. But even if our clients will let us get away with wiggling out of all responsibility for planes in ditches (something that seems extremely unlikely), this still won't cut it. As Jackson points out in a recent paper, it is almost impossible for software developers to build correct software if they don't understand the problem domain, and how what they are doing relates to what happens there.

One potentially attractive view, is that the concerns of computer science are bounded by the interface between the computer and the world outside it.... So if we restrict our concerns to the behaviour of the computer itself we can set aside the disagreeably complex and informal nature of the problem world. It is somebody else's task to grapple with that. ...

Unfortunately, ... the specification of computer behaviour at the interface, taken in isolation, is likely to be a description of arbitrary and therefore unintelligible behaviour. ... Practising programmers who try to adhere to this doctrine will find themselves devoting their skills to tasks that seem at best arbitrary and at worst senseless. [Italics mine -- SRF]

-- Michael Jackson, "The Real World"

Rephrasing this point in terms of Use Cases: it is almost impossible for programmers to build correct software if they are given Use Case information but no information about the problem domain and how their program relates to what happens there. Yet this is what the Use Case Approach encourages requirements analysts to do. This is the real problem with the Use Case Approach. It discourages the requirements analyst from examining the problem domain, by focusing attention only on what happens at the system boundary.

For some applications, there clearly is domain information that must be specified in the system requirements, but which has no natural home in any particular Use Case. Often, for example, an important requirement for a system is that it enforce (or at least not violate) a set of business rules or governmental regulations. Other systems (e.g. systems in the physical and social sciences) are heavily algorithm-driven. The Use Case Approach provides no natural mechanism for capturing such mathematical algorithms, business rules, and government regulations. Certainly, it is very unnatural to embed them in the descriptions of specific Use Cases. Specifying a single big module that contains all of the customer's business rules and (in UML terminology) extends every Use Case, is possible but clumsy and unnatural. Use Cases are simply not the best tool for capturing such requirements.

Nobody really knows what a Use Case description looks like. Use Cases can be written at a very high level of detail, or at a very low level, or anywhere in between.

In some organizations, Use Cases may be written at a very high level. Use Case descriptions that are written at too high a level are often useless. Sometimes, they are worse than useless, because they give the impression that the system requirements have been completely specified, when in fact that is not true at all. A recent case in point was a project to develop a securities information system. The customers knew that they wanted the system to generate reports, so the system specification included a Use Case to Run Reports. The problems with this Use Case didn't emerge until the requirements analyst began to ask the customers about the contents of the reports that they wanted to generate. Then it emerged that the customer had in mind 140 reports of radically varying content, and the real requirement for the system -- what the customer really needed -- was a system that could store a history of all of the kinds of events that could affect securities. Virtually all of the system complexity, and all the information needed to design the system, was hidden under the single Use Case Run Reports.

In other organizations, Use Cases may be written at a detailed, implementation-specific level, describing the mechanics of the graphical user interface (GUI), complete with buttons, menus, and drop-down list boxes. Often, at a stage in the project when the primary concern should be understanding the business context and functions that the system must support, the client is instead engaging in premature user-interface design.

In addition, as the editor of www.uidesign.net observed, in many organizations the clients or end-users are the ones who write the Use Case descriptions. But without any training in user-interface design

... there is little hope that [the end-users] will make a good job of it. ... The adoption of Rational Unified Process in its complete form is likely to set the development of good User Interface Design back by perhaps 20 years.

The result, in short, is not only that the user-interface design is being done at the wrong time, it is being done by the wrong people.

An important feature of any useful requirements-gathering methodology is that it provides a systematic approach to identifying all of the system requirements. A requirements-gathering methodology that consists of writing Use Cases is no methodology at all, because it provides no help in systematically addressing the problem. The only guidance that the Use Case approach provides is the most generic question possible: "What would you like to do with the system, and how would you like it to behave?"

As Ben Kovitz points out, writing software requirements by writing Use Cases as they come to mind is the requirements-writing equivalent of programming by hacking around. What it produces is simply a sprawl of Use Cases. How can you tell if you've identified all of the Use Cases for the system? How can you tell if the Use Cases conflict? How can you tell if the Use Cases leave any gaps? It is not possible for an ordinary human to understand all of the ways that a grab-bag of 50, or 100, or 200 Use Cases are inter-related. Suppose the customer wants the system to support some new functionality. How can you tell which, if any, Use Cases will be affected by the change? There simply are no answers to these questions.

In short, as a methodology the Use Case Approach is too mushy to provide any real guidance in gathering requirements. The Use Case Approach, in fact, is not a methodology at all; it is merely a notation. Once an organization has settled on the template that it wants to use for describing Use Cases, that's it. At that point, the organization has got all the methodology help that it is going to get out of the Use Case Approach.

There is really nothing at all that makes the Use Case Approach especially "object-oriented". Confined to describing behavior at the system boundary, a set of Use Cases describes neither objects in the real world nor objects inside the computer.

Because the Use Case Approach is not object-oriented, it is completely compatible with non-object-oriented methods. As Grady Booch observed:

[A] very real danger is that your development team will take [its] object-oriented scenarios and turn them into a very nonobject-oriented implementation. In more than one case, I've seen a project start with scenarios, only to end up with a functional architecture, because they treated each scenario independently, creating code for each thread of control represented by each scenario.

-- entry "Scenarios" in [Booch, 1998]

There is nothing wrong with not being object-oriented, of course. If a technique is useful, it is useful, object-oriented or not. But given the popularity of the buzzword "object-oriented", I think it is important to point out that the object-oriented-ness of Use Cases is a myth. Use Cases are enjoying their current popularity because the Three Amigos bundled them into UML along with other, truly object-oriented, techniques. Whatever object-oriented-ness Use Cases have, they have acquired solely by rubbing up against true object-oriented methodologies into whose company they have fortuitously fallen.

The best metaphor for methodology skills is a handyman's toolbox. No handyman could be successful if he relied on a single tool (a hammer, say) for everything that he did. Hammers don't work well when you're trying to drive a screw, nor when you need to cut a plank. You need different tools for different jobs.

A software developer is a software handyman. To do everything that he needs to do, a software developer needs a toolbox that contains a variety of tools. Use Case description shouldn't be the only tool in the toolbox. It should simply be there along with the other tools, so that it is available when it is the right tool for the job.

Most of the problems with Use Cases that we've discussed, like the problems with chocolate, come not from the thing itself, but from its improper or excessive use. In spite of all of the problems we've discussed, Use Cases can be useful — when they are used properly. Most importantly, Use Cases can be an effective tool when they are developed in a disciplined manner, as part of a methodology that first creates a well-defined domain model.

The domain model provides an infrastructure for the requirements-gathering process, so that the development of Use Cases can proceed systematically, in a way that could never happen without the domain model. The domain model describes the objects in the problem domain, including the events in the lives of objects. Once the events in the lives of the objects have been described, the requirements analyst can approach the task of writing Use Cases in a systematic fashion, by writing a Use Case for each of the events. If the life of a library book includes events such as being acquired, being borrowed, being returned, etc., then the analyst will develop a Use Case for each of those events. Each Use Case answers questions derived from specific events: "How does the system get told that a book has been returned?" or "How does the system know when to generate overdue-notice letters?"
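The event-driven derivation just described can be sketched as a simple checklist generator. This is a minimal sketch under stated assumptions: the event list is taken from the examples in the text, while the function name `use_case_checklist` and the output structure are hypothetical.

```python
# Life-cycle events for one domain object, taken from the examples in the text.
BOOK_EVENTS = ["acquired", "borrowed", "returned"]


def use_case_checklist(entity, events):
    """Produce one Use Case stub per life-cycle event, so that no event is skipped."""
    return [
        {
            "use_case": f"{entity} is {event}",
            "question": (
                f"How does the system get told that a {entity.lower()} "
                f"has been {event}?"
            ),
        }
        for event in events
    ]


for stub in use_case_checklist("Book", BOOK_EVENTS):
    print(stub["use_case"], "->", stub["question"])
```

The value of the list lies in its completeness check rather than in the code: every event in the domain model yields exactly one stub, so a missing Use Case corresponds to a visible gap instead of something nobody happened to think of.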

In actual practice, of course, deriving Use Cases from the domain model is rarely as simple and mechanical as we've just described. But there are techniques for dealing with more intricate situations. The whole purpose of systems analysis methodologies, in fact, is to provide guidance for situations in which the process is not so simple and mechanical. But whether the process of developing a particular Use Case is simple or tricky, its foundation must always lie in a solid understanding of the problem, and a carefully-constructed description of the problem domain.

In summary, a set of Use Cases is a description of the system to be constructed, the thing to be built, the solution to the problem. But, as Michael Jackson points out, before you can effectively start building the solution to a problem, "first you must concentrate your attention on the application domain to learn what the problem is about." [Jackson, 1995, p. 158]

Several of the ideas in this paper are derived from the works of Michael Jackson, and from Ben Kovitz's short discussion of Use Cases in Practical Software Requirements (pp. 251-252). I hope that Ben and Michael will consider theft the sincerest form of flattery. Neither, of course, is responsible for any mistakes that I might have made in presenting their ideas, or for cases in which my opinion or terminology differs from theirs.

[Booch, 1998] Grady Booch, Best of Booch (ed. Ed Eykholt), Cambridge University Press, 1998

[Jackson, 1983] Michael Jackson, System Development, ACM Press, 1983

[Jackson, 1995] Michael Jackson, Software Requirements and Specifications, Addison-Wesley, 1995

[Jackson, 2000] Michael Jackson, "The Real World" in Millennial Perspectives in Computer Science: Proceedings of the 1999 Oxford-Microsoft Symposium in Honour of Sir Anthony Hoare (ed. Jim Davies, Bill Roscoe, Jim Woodcock), Palgrave, 2000

[Kovitz, 1999] Benjamin L. Kovitz, Practical Software Requirements, Manning, 1999